SML Text Classification

Functional Framework using Naïve Bayes and MapReduce

- What I built: A robust text classification framework from scratch in Standard ML.

- Why it matters: Demonstrates how functional programming paradigms (immutability, higher-order functions) apply to data science tasks.

- Proof: Implemented a reusable "Extract-Combine" pattern that mimics MapReduce for parallel data processing.

Problem / Goal

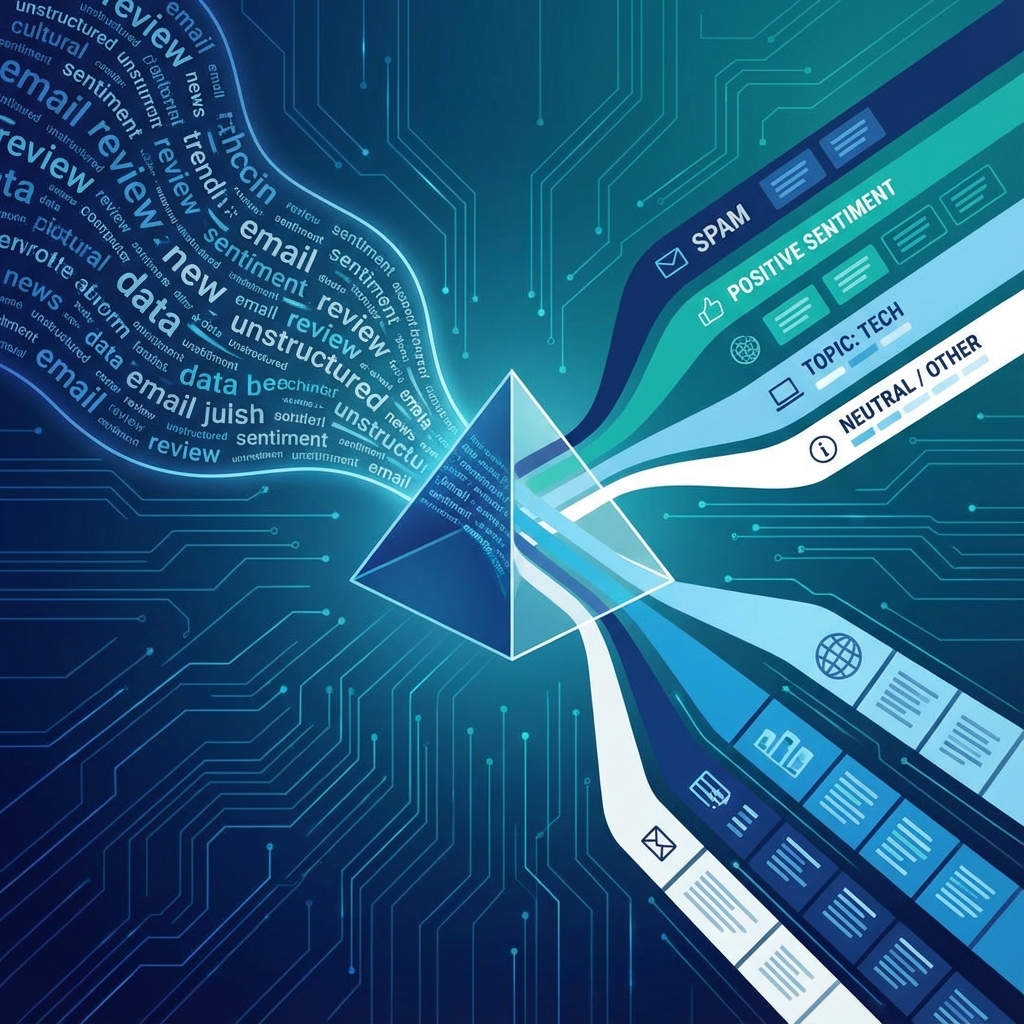

Data processing tasks are typically associated with imperative languages (like Python or C++). The goal of this project was to implement a rigorous text classification system using Standard ML, leveraging functional programming paradigms like higher-order functions and immutable data structures.

At its core, the framework needed to provide a functional analogue to MapReduce to efficiently process large document sets and train a Naïve Bayes classifier.

My Contribution

I engineered the core functional abstractions:

- Extract-Combine Pattern: Developed a higher-order function that maps inputs to key-value pairs (extract) and reduces them (combine), enabling modular parallelization.

- Naïve Bayes Logic: Implemented the statistical classifier to probability distributions of document categories.

- Modular Architecture: Designed the system to separate the parallel execution engine from the specific classification logic, allowing plug-and-play components.

Technical Approach

1. The MapReduce Abstraction (Extract-Combine)

The `extractcombine` function is the engine of the framework. It handles the dictionary management and collision resolution entirely through recursion and pattern matching.

fun extractcombine (compare_fn, extractor_fn, combiner_fn, dataset) =

let

(* Maps key-values into the dictionary, combining collisions *)

fun combinePair (dict, (key, value)) =

case Dict.lookup (compare_fn, dict, key) of

NONE => Dict.insert (compare_fn, dict, (key, value))

| SOME existing =>

Dict.insert (compare_fn, dict, (key, combiner_fn (existing, value)))

in

(* ... Implementation details omitted for brevity ... *)

combined_result

end2. Declarative Training Pipeline

Training the classifier becomes a concise series of functional transformations. Here, we count total words per category by mapping each document to a (Category, WordCount) pair and summing them.

(* Calculate the total number of words in each category *)

val countWordsPerCategory =

ExtractCombine.extractcombine (

String.compare,

(* Extract: Map each document to (Category, WordCount) *)

(fn (category, doc) => Seq.singleton (category, Seq.length doc)),

(* Combine: Sum WordCounts for the same Category *)

(fn (x, y) => x + y),

train)Validation / Results

The framework successfully classifies text documents with high accuracy on standard datasets. More importantly, the modular design allows exact same `extractcombine` engine to be used for unrelated tasks (like log analysis or word counting) without modification.